What Does It Mean to Hide a Post on Reddit

It's hard to shock Collin Williams, a volunteer moderator for r/RoastMe, a comedic insult forum with 2.3 million members. But nearly a year ago he was surprised by something in his own comment history.

The ghosts of posts he had attempted to remove were still there.

Per 2020 numbers, 52 million people visit Reddit's site each day and peruse some three million topic-specific forums, or subreddits. Those communities are kept on-topic (and in theory, consistent with Reddit's content policies), mostly thanks to human volunteers and automated bots, collectively referred to as moderators or "mods."

As a moderator, Williams tends to a garden of posts and comments, a process that includes removing posts that break subreddit rules. Moderators set their own rules for what is postable in their own forums but also play a role in enforcing Reddit's content policy.

On r/RoastMe, those moderator-created rules outlaw posting pictures of someone without their permission or of people under 18, for example. But even after Williams removed offending posts from his subreddit, they were, somehow, still viewable in his account's comment history.

The moderator's entire history becomes this giant list … of everything they've removed and for what reason.

r/RoastMe moderator Collin Williams

And then, Williams had an epiphany. This probably wasn't a problem just on his subreddit.

Williams was right. Across Reddit, when a moderator removes a post, the post is unlisted from the subreddit's main feed. But images or links within that post don't actually disappear. Posts removed by moderators are still readily available to anyone on Reddit in the comment history of the moderator who flagged them, complete with an explanation of the rule they violate, or to anyone who retained a direct URL to the post.

"The moderator's entire history becomes this giant list, basically an index, of everything that they've removed and for what reason. Reddit kind of accidentally created this giant index of stuff that humans have flagged as being inappropriate on the site," Williams said.

This is happening across subreddits. You can still find moderator-removed video game memes or reposts (a major Reddit faux pas) of dog photos. But it also happens on subreddits dedicated to posting sexual or sexualized content. In those cases, this loophole allows posts to persist on the site, even though they violate Reddit's content policy.

For example, the subreddit-specific rules of r/TikTokThots, a subreddit dedicated to sexualized TikTok videos, explicitly instruct users not to post videos of people presumed to be under 18, in keeping with the Reddit-wide content policy against "the posting of sexual or suggestive content involving minors." But The Markup was still able to find removed posts in moderators' comment histories in which certain images, flagged by moderators for depicting presumed minors, were still live and visible.

In the more risqué subreddit r/TikTokNSFW, moderators removed at least one nude image because it "contains minors," according to moderator judgment, yet the image is still visible on the site through the removing moderator's comment history.

This is thus far a huge miss by the engineering and UX team….

Sameer Hinduja, Cyberbullying Research Center

"This is thus far a huge miss by the engineering and UX team and must be rectified immediately in order to prevent new or recurrent victimization of those featured in the content," said Sameer Hinduja, co-director of the Cyberbullying Research Center and a professor of criminology at Florida Atlantic University.

Williams privately reached out to Reddit's r/ModSupport, a point of contact between Reddit administrators (i.e., employees) and volunteer moderators, on Sept. 15, alerting them to this issue. He later received a DM from a Reddit administrator saying, "Thanks for sending this in. We'll take a look!" But as of publication time, there had been no change.

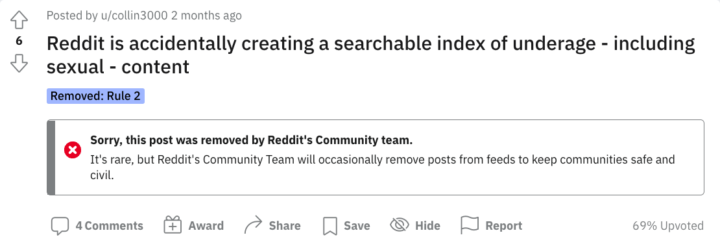

About one month later, Williams also posted publicly in Reddit's r/ModSupport to alert other moderators to the potential problem. Reddit's Community Team removed his post.

Credit: Reddit.com

Reddit has not responded to multiple requests for comment for this story.

Grappling with Content Removal

Reddit has continually grappled with how to police its endless list of online communities. This has played out most publicly in Reddit's NSFW (not safe for work) content, particularly nude or sexualized images.

Before 2012, Reddit did not have a publicly posted policy explicitly banning content that sexualized minors, but the company did report "clear cut cases" of child sexual abuse material (CSAM) to the National Center for Missing and Exploited Children. When it came to what Reddit termed "legally grey" areas surrounding CSAM, Reddit initially dealt with them on a "case by case basis." But eventually Reddit took more sweeping action to curb CSAM on its platform. For instance, Reddit administrators banned the controversial community r/jailbait after a nude picture of a 14-year-old girl was posted on the subreddit.

By 2012, Reddit had reformed its content policy: All subreddits that focused on the sexualization of children were banned. But in 2014, The Washington Post suggested little had substantively changed, with "every kind of filth and depravity you can conceive of, and probably quite a bit that you can't" still live on the site.

Reddit's current policy bans any sexual or suggestive content involving minors or anyone who even appears to be under 18. Users and moderators can report images sexualizing minors to Reddit administrators, who will remove the posts themselves and then report blatant CSAM to the National Center for Missing and Exploited Children.

Yet, Reddit is currently facing a class-action lawsuit related to alleged lax enforcement of CSAM policy. In a complaint filed in April 2021 in the U.S. District Court for the Central District of California, one woman accused the company of allowing an ex-boyfriend to repeatedly post nude videos and images of her as a teenager.

"Reddit claims it is combating child pornography by banning certain subreddits, but only after they gain enough popularity to garner media attention," the complaint reads.

Reddit filed a motion to dismiss this case, citing Section 230 of the Communications Decency Act, which protects websites against liability from content posted by third parties. A court granted that motion in October, and the plaintiff has since appealed to the U.S. Court of Appeals for the Ninth Circuit.

While Reddit has banned communities that violate its rules, the architecture of Reddit's site itself allows content deemed by moderators to violate Reddit's policies to persist in a moderator's comment history. Hinduja said this loophole can present problems for people struggling to remove sensitive content from Reddit's platform.

"It clearly is problematic that sexual content or personally identifiable information which violates their policies (and in the history, clear explanation is typically provided as to why the material transgresses their platform rules) still is available to see," he said.

There are some cases in which removed posts don't have accessible images. If users delete their own content (if prompted by a moderator, or of their own volition), the images seem to truly disappear from the site, including any comment history. If a user doesn't take this action, there's not much moderators can do to truly delete content that breaks Reddit's rules, other than report it to Reddit.

Reddit's guidelines for moderators acknowledge that moderators can remove posts from subreddits but don't have the ability to truly delete posts from Reddit. Moderators, to some extent, are aware of this fact.

[The best a moderator can do is] click the report button and hope the overlords notice it.

u/LeThisLeThatLeNO, Reddit moderator

A moderator for six Reddit communities, u/manawesome326, told The Markup that removing posts as a moderator can prevent posts from showing up in subreddit feeds, but it "leaves the shell of the post accessible from the post's URL," which makes it accessible via moderator histories.

Moderators of more risqué subreddits are also aware that they can't truly remove content. A moderator for r/TikTokNSFW, u/LeThisLeThatLeNO, also noted to The Markup that removed posts on Reddit are still viewable and that the best a moderator can do is to "click the report button and hope the overlords notice it."

"In my experience (over 1 year ago) making reports almost always led to nothing, unless a whole avalanche of reports go in," the moderator said via Reddit.

Reddit has previously attempted to limit who can view removed content. In a post in r/changelog, a subreddit dedicated to platform updates, Reddit administrators wrote, "Stumbling across removed and deleted posts that still have titles, comments, or links visible can be a confusing and negative experience for users, especially people who are new to Reddit."

The platform noted it would allow only moderators, original posters, or Reddit administrators to view removed posts that would previously have been accessible from a direct URL. The limits applied only to posts with fewer than two upvotes or comments.

Many moderators, though, had major issues with this change, because subreddits often referred to removed content in conversations and moderators have found it useful to view accounts' past posts (even user-deleted ones) to investigate patterns of bad behavior.

The trial was discontinued in late June. Reddit did not respond to questions asking for specifics on why the trial had been discontinued.

Meanwhile, Williams has tried to block the route most Redditors might use to find deleted posts: his comment history. He has started to privately message users who post rule-breaking content on his subreddit to explain why it was removed rather than leaving a public comment. This method, he hopes, will make removed posts harder to find.

If anyone had happened to squirrel away a direct link to a removed post, however, the offending images are still accessible.

Moderation Versus "Platform Cleanliness"

Although Reddit does have the power to truly delete posts, it's still the volunteer moderators, real and automated, who do the bulk of content management on Reddit. (Posts removed by bots also still contain visible images if viewed through the bot's comment history.)

According to Reddit's 2020 transparency report, Reddit administrators removed 2 percent of all content added to the site in 2020, whereas moderators, including bots, removed 4 percent of content.

Loopholes like this one create a gray area when it comes to content moderation, said Richard Rogers, the chair in New Media and Digital Culture at the University of Amsterdam.

While moderators are a first line of defense responsible for keeping prohibited content off of their subreddits, this loophole means they have little power to enforce what Rogers calls "platform cleanliness."

"And then the responsibility shifts from the individual subreddit and the moderators to the platform," Rogers said to The Markup. "So seemingly, here, we have a disconnect between those two levels of content moderation."

In the meantime, images in removed posts will continue to linger in Reddit's corners, just a click away from being discovered.

Source: https://themarkup.org/news/2021/12/16/the-shadows-of-removed-posts-are-hiding-in-plain-sight-on-reddit
